How to Prevent Getting Blacklisted While Scraping

Web scraping is a task that has to be performed responsibly so that it does not have a detrimental effect on the sites being scraped. Web crawlers can retrieve data much faster and in greater depth than humans, so bad scraping practices can have some impact on the performance of the site. While most websites may not have anti-scraping mechanisms, some sites use measures that can get web scrapers blocked, because they do not believe in open data access.

If a crawler performs multiple requests per second and downloads large files, an under-powered server would have a hard time keeping up with requests from multiple crawlers. Since web crawlers, scrapers or spiders (words used interchangeably) don't really drive human website traffic and seemingly affect the performance of the site, some site administrators do not like spiders and try to block their access.

In this article, we will talk about the best web scraping practices to follow to scrape websites without getting blocked by anti-scraping or bot detection tools.

Web scraping best practices to follow to scrape without getting blocked

- Respect Robots.txt

- Make the crawling slower, do not slam the server, treat websites nicely

- Do not follow the same crawling pattern

- Make requests through Proxies and rotate them as needed

- Rotate User Agents and corresponding HTTP Request Headers between requests

- Use a headless browser like Puppeteer, Selenium or Playwright

- Beware of Honeypot Traps

- Check if Website is Changing Layouts

- Avoid scraping data behind a login

- Use Captcha Solving Services

- How can websites detect web scraping?

- How do you find out if a website has blocked or banned you?

Basic Rule: "Be Nice"

An overarching rule to keep in mind for any kind of web scraping is

BE GOOD AND FOLLOW A WEBSITE'S CRAWLING POLICIES

Here are the web scraping best practices you can follow to avoid getting blocked:

Respect Robots.txt

Web spiders should ideally follow a website's robots.txt file while scraping. It has specific rules for good behavior, such as how frequently you can scrape, which pages allow scraping, and which ones you can't. Some websites allow Google to scrape them while not allowing any other bots to do so. This goes against the open nature of the Internet and may not seem fair, but the owners of the website are within their rights to resort to such behavior.

You can find the robots.txt file on websites. It is usually in the root directory of a website: http://example.com/robots.txt.

If it contains lines like the ones shown below, it means the site does not want to be scraped.

User-agent: *

Disallow:/

However, since most sites want to be on Google, arguably the largest scraper of websites globally, they allow access to bots and spiders.

What if you need some data that is forbidden by Robots.txt? You could still scrape it. Most anti-scraping tools block web scraping when you are scraping pages that are not allowed by Robots.txt.
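Python's standard library can check these rules before each request. The sketch below parses a hypothetical robots.txt from a string so it runs offline; for a live site you would call `set_url()` and `read()` on the site's real file instead. `MyScraper` is a placeholder bot name, and the file deliberately mirrors the Google-only situation described above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: everyone is kept out of /private/ and asked to wait
# 10 seconds between requests, while Googlebot is allowed everywhere.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10

User-agent: Googlebot
Disallow:
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text. For a live site use set_url(...) then read()."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """True if the parsed rules let `user_agent` fetch `url`."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    print(allowed(rp, "MyScraper", "http://example.com/private/page"))  # False
    print(allowed(rp, "MyScraper", "http://example.com/shop/"))         # True
    print(allowed(rp, "Googlebot", "http://example.com/private/page"))  # True
    print(rp.crawl_delay("MyScraper"))                                   # 10
```

`crawl_delay()` returns the `Crawl-delay` value that applies to your bot (or `None`), which you can feed straight into the pauses discussed in the next section.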

What do these tools look for? Is this client a bot or a real user? And how do they find that? By looking for a few things that real users do and bots don't. Humans are random, bots are not. Humans are not predictable, bots are.

Here are a few easy giveaways that you are a bot/scraper/crawler:

- Scraping too fast and too many pages, faster than a human ever can

- Following the same pattern while crawling. For example, going through all pages of search results and visiting each result only after grabbing the links to all of them. No human ever does that.

- Too many requests from the same IP address in a very short time

- Not identifying as a popular browser. You can fix this by specifying a 'User-Agent'.

- Using a user agent string of a very old browser

The points below should get you past most of the basic to intermediate anti-scraping mechanisms used by websites to block web scraping.

Make the crawling slower, do not slam the server, treat websites nicely

Web scraping bots fetch data very fast, but that makes it easy for a site to detect your scraper, as humans cannot browse that fast. The faster you crawl, the worse it is for everyone. If a website gets more requests than it can handle, it might become unresponsive.

Make your spider look real by mimicking human actions. Put some random programmatic sleep calls in between requests, add some delays after crawling a small number of pages and choose the lowest number of concurrent requests possible. Ideally, put a delay of 10-20 seconds between clicks and do not put much load on the website, treating the website nicely.
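A minimal sketch of these pauses in Python, assuming a `fetch` callable you supply (for example a thin wrapper around `requests.get`) and processing one URL at a time, i.e. a concurrency of one. The 10-20 second range comes from the text above; the batch size of 5 and the 60-second batch pause are illustrative assumptions.

```python
import random
import time
from typing import Callable, Iterable, List


def polite_crawl(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    min_delay: float = 10.0,
    max_delay: float = 20.0,
    batch_size: int = 5,
    batch_pause: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch URLs sequentially (a concurrency of one) with randomized pauses.

    `fetch` is a callable you supply, e.g. a wrapper around requests.get.
    `sleep` is injectable so the pauses can be tested without waiting.
    """
    pages: List[str] = []
    for count, url in enumerate(urls, start=1):
        pages.append(fetch(url))
        # A random gap between min_delay and max_delay (10-20 s by default),
        # so requests do not arrive on a fixed rhythm.
        sleep(random.uniform(min_delay, max_delay))
        # An extra, longer break after every small batch of pages.
        if count % batch_size == 0:
            sleep(batch_pause)
    return pages
```

Passing `sleep` as a parameter is only a testing convenience; in a real crawler you would leave it at `time.sleep`.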

Use auto throttling mechanisms, which automatically throttle the crawling speed based on the load on both the spider and the website that you are crawling. Adjust the spider to an optimum crawling speed after a few trial runs. Do this periodically, because the environment does change over time.
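Scrapy ships an AutoThrottle extension (enabled with the `AUTOTHROTTLE_ENABLED` setting) for exactly this. The class below is a simplified, framework-free sketch of the same idea, not Scrapy's code: the delay drifts toward the server's observed response latency, stays between a minimum and a maximum, and never shrinks after an error response. Doubling the delay on 429 and 503 responses is an extra assumption of this sketch, and all default values are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class AutoThrottle:
    """Adaptive per-site delay (a simplified sketch, not Scrapy's implementation)."""

    start_delay: float = 5.0
    min_delay: float = 1.0
    max_delay: float = 60.0
    target_concurrency: float = 1.0
    delay: float = field(init=False)

    def __post_init__(self) -> None:
        self.delay = self.start_delay

    def update(self, latency: float, status: int) -> float:
        """Adjust and return the delay after a response that took `latency` seconds."""
        # Aim for a delay that keeps about `target_concurrency` requests in flight.
        target = latency / self.target_concurrency
        new_delay = (self.delay + target) / 2.0
        if status in (429, 503):
            # Explicit back-off when the server signals overload (assumption).
            new_delay = self.delay * 2.0
        elif status != 200 and new_delay < self.delay:
            # Other errors are often fast; do not let them speed the crawler up.
            new_delay = self.delay
        self.delay = max(self.min_delay, min(self.max_delay, new_delay))
        return self.delay
```

Call `update()` after every response and sleep for the returned number of seconds before the next request to the same site. A slow server therefore automatically slows the crawler down.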

Do not follow the same crawling pattern

Humans generally do not perform repetitive tasks; they browse through a site with random actions. Web scraping bots tend to have the same crawling pattern because they are programmed that way unless told otherwise. Sites that have intelligent anti-crawling mechanisms can easily detect spiders by finding patterns in their actions, which can lead to web scraping getting blocked.

Incorporate some random clicks on the page, mouse movements and random actions that will make a spider look like a human.
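Mouse movements and clicks need a real browser (see the headless browser section), but the crawl order itself can be varied in plain Python. This sketch targets the giveaway described earlier, fetching every search-results page before any result: it visits each listing page and then that page's results in a shuffled order. The `listing_pages` mapping is a hypothetical input that your own link extraction would build.

```python
import random
from typing import Dict, List, Optional


def interleaved_order(
    listing_pages: Dict[str, List[str]], seed: Optional[int] = None
) -> List[str]:
    """Build a visit order that opens results right after their listing page.

    `listing_pages` maps a search-results URL to the result URLs found on it.
    Instead of fetching every listing page first and every result afterwards,
    each listing page is followed by its own results in a random order.
    """
    rng = random.Random(seed)
    order: List[str] = []
    for listing_url, results in listing_pages.items():
        order.append(listing_url)
        picks = list(results)  # copy so the caller's list is not reordered
        rng.shuffle(picks)
        order.extend(picks)
    return order
```

This only changes the order of requests, not their number, so it does not add load to the site.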

Make requests through Proxies and rotate them as needed

When scraping, your IP address can be seen. A site will know what you are doing and whether you are collecting data. It could also gather data such as user patterns, or whether you are a first-time user.

Multiple requests coming from the same IP will get you blocked, which is why we need to use multiple addresses. When we send requests from a proxy machine, the target website will not know where the original IP is from, making detection harder.

Create a pool of IPs that you can use and pick a random one for each request. Along with this, spread your requests across multiple IPs so that each one sends only a handful.

There are several methods that can change your outgoing IP.

- TOR

- VPNs

- Free Proxies

- Shared Proxies: the least expensive proxies, shared by many users. The chances of getting blocked are high.

- Private Proxies: usually used only by you, with lower chances of getting blocked if you keep the frequency low.

- Data Center Proxies: if you need a large number of IP addresses, faster proxies and larger pools of IPs. They are cheaper than residential proxies but could be detected easily.

- Residential Proxies: if you are making a huge number of requests to websites that actively block scrapers. These are very expensive (and could be slower, as they are real devices). Try everything else before getting residential proxies.

In addition, various commercial providers also offer services for automatic IP rotation. A lot of companies now provide residential IPs to make scraping even easier, but most are expensive.
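Once you have a pool of proxies, rotating them can be as simple as picking one at random per request and retiring any that start getting blocked. The sketch below assumes plain `http://host:port` proxy URLs; the addresses are placeholders from the 203.0.113.0/24 documentation range. `choose()` returns a mapping in the format that the `proxies=` argument of `requests.get` accepts.

```python
import random
from typing import Dict, List, Optional


class ProxyPool:
    """Pick a random proxy per request and retire proxies that get blocked."""

    def __init__(self, proxies: List[str], seed: Optional[int] = None) -> None:
        self.proxies = list(proxies)
        self.rng = random.Random(seed)

    def choose(self) -> Dict[str, str]:
        """Return a requests-style proxies mapping for one random proxy."""
        if not self.proxies:
            raise RuntimeError("proxy pool is exhausted")
        proxy = self.rng.choice(self.proxies)
        return {"http": proxy, "https": proxy}

    def mark_bad(self, proxy: str) -> None:
        """Remove a proxy that started returning blocks (403, 429, CAPTCHAs...)."""
        if proxy in self.proxies:
            self.proxies.remove(proxy)


# Placeholder addresses; replace with the proxies you actually have.
pool = ProxyPool(["http://203.0.113.10:8080", "http://203.0.113.11:8080"])
```

For example, `mapping = pool.choose()` followed by `requests.get(url, proxies=mapping, timeout=30)`; if the response looks blocked, call `pool.mark_bad(mapping["http"])`.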

Rotate User Agents and corresponding HTTP Request Headers between requests

The User-Agent is a request header that tells the server which web browser is being used. If the user agent is not set, many websites won't let you view content. Every request made from a web browser contains a user-agent header, and using the same user-agent consistently leads to the detection of a bot. You can get your own User-Agent by typing 'what is my user agent' in Google's search bar. The only way to make your User-Agent appear more real and bypass detection is to fake the user agent. Most web scraping libraries do not send a browser User-Agent by default (Python's requests, for example, identifies itself as python-requests), so you need to add one yourself.

You could even pretend to be the Google Bot, Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html), if you want to have some fun!

Now, sending a User-Agent alone would get you past most basic bot detection scripts and tools. If you find your bots getting blocked even after putting in a recent User-Agent string, you should add some more request headers.

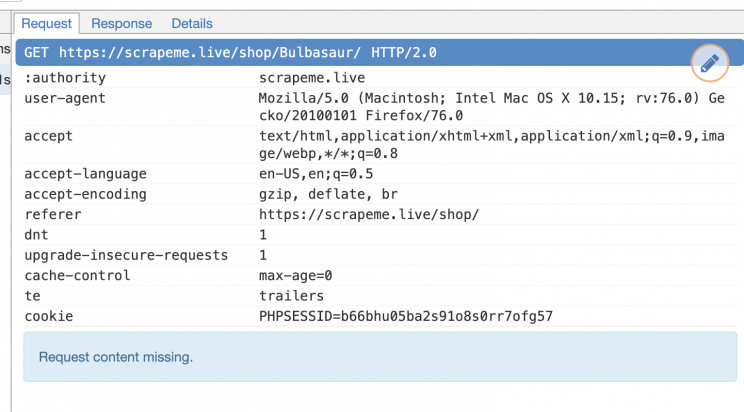

Most browsers send more headers to websites than just the User-Agent. For example, here is a set of headers a browser sent to Scrapeme.live (our web scraping test site). It would be ideal to send these common request headers as well.

The most basic ones are:

- User-Agent

- Accept

- Accept-Language

- Referer

- DNT

- Upgrade-Insecure-Requests

- Cache-Control

Do not send cookies unless your scraper depends on cookies for functionality.

You can find the right values for these by inspecting your web traffic using Chrome Developer Tools, or a tool like MitmProxy or Wireshark. You can also copy a request as a curl command from them (in Chrome's Network tab: Copy as cURL). For example:

curl 'https://scrapeme.live/shop/Ivysaur/' \
  -H 'authority: scrapeme.live' \
  -H 'dnt: 1' \
  -H 'upgrade-insecure-requests: 1' \
  -H 'user-agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36' \
  -H 'accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9' \
  -H 'sec-fetch-site: none' \
  -H 'sec-fetch-mode: navigate' \
  -H 'sec-fetch-user: ?1' \
  -H 'sec-fetch-dest: document' \
  -H 'accept-language: en-GB,en-US;q=0.9,en;q=0.8' \
  --compressed

You can get this converted to any language using a tool like https://curl.trillworks.com

Here is how this was converted to Python:

import requests

headers = {
    'authority': 'scrapeme.live',
    'dnt': '1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36',
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'sec-fetch-site': 'none',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-user': '?1',
    'sec-fetch-dest': 'document',
    'accept-language': 'en-GB,en-US;q=0.9,en;q=0.8',
}

response = requests.get('https://scrapeme.live/shop/Ivysaur/', headers=headers)

You can create similar header combinations for multiple browsers and start rotating those headers between each request to reduce the chances of getting your web scraping blocked.
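Rotating whole header sets, rather than only the User-Agent, keeps each request internally consistent, since a User-Agent from one browser combined with another browser's Accept headers can be easy to spot. A minimal sketch, assuming two hand-written profiles: the Chrome values are taken from the request above, while the Firefox values are illustrative only, so copy current values from your own browsers' developer tools.

```python
import random
from typing import Dict, List, Optional

# Hypothetical header profiles. Each keeps a User-Agent together with the
# Accept and Accept-Language values sent alongside it, so a request never
# mixes values from different browsers.
HEADER_PROFILES: List[Dict[str, str]] = [
    {
        "user-agent": (
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 "
            "(KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36"
        ),
        "accept": (
            "text/html,application/xhtml+xml,application/xml;q=0.9,"
            "image/webp,image/apng,*/*;q=0.8"
        ),
        "accept-language": "en-GB,en-US;q=0.9,en;q=0.8",
        "upgrade-insecure-requests": "1",
    },
    {
        "user-agent": (
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:77.0) "
            "Gecko/20100101 Firefox/77.0"
        ),
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8",
        "accept-language": "en-US,en;q=0.5",
        "upgrade-insecure-requests": "1",
    },
]


def random_headers(rng: Optional[random.Random] = None) -> Dict[str, str]:
    """Return a copy of one randomly chosen header profile."""
    if rng is None:
        rng = random.Random()
    # Copy so callers can add e.g. a Referer without altering the profile.
    return dict(rng.choice(HEADER_PROFILES))
```

Each request can then use `requests.get(url, headers=random_headers(), timeout=30)`.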

Utilise a headless browser similar Puppeteer, Selenium or Playwright

If none of the methods above work, the website must be checking whether you are a real browser.

The simplest check is whether the client (web browser) can run a block of JavaScript. If it can't, the site pretty much flags the visitor as a bot. While it is possible to block JavaScript from running in the browser, most Internet sites will be unusable in such a scenario and, as a result, most browsers will have JavaScript enabled.

Once this happens, a real browser is necessary in most cases to scrape the data. There are libraries to automatically control browsers, such as

- Selenium

- Puppeteer and Pyppeteer

- Playwright

Anti-scraping tools are smart and are getting smarter daily, as the traffic from bots feeds their AI models with more data to detect them. Most advanced bot mitigation services use browser-side fingerprinting (client-side bot detection), relying on more advanced methods than just checking if you can execute JavaScript.

Bot detection tools look for any flags that can tell them that the browser is being controlled through an automation library:

- Presence of bot specific signatures

- Support for nonstandard browser features

- Presence of common automation tools such as Selenium, Puppeteer, Playwright, etc.

- Human-generated events such as randomized Mouse Movement, Clicks, Scrolls, Tab Changes etc.

All this information is combined to construct a unique client-side fingerprint that can tag one every bit bot or human.

Here are a few workarounds or tools which could help keep your headless browser-based scrapers from getting banned.

Beware of Honeypot Traps

Honeypots are systems set up to lure hackers and detect any hacking attempts that try to gain information. A honeypot is usually an application that imitates the behavior of a real system. Some websites install honeypots, which are links invisible to normal users but visible to web scrapers.

When following links, always make sure that the link has proper visibility and no nofollow tag. Some honeypot links meant to detect spiders will have the CSS style display:none or will be color-disguised to blend in with the page's background color.

This detection is obviously not easy and requires a significant amount of programming work to accomplish properly; as a result, this technique is not widely used on either side, the server side or the bot/scraper side.
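A partial check is still cheap to add. This sketch uses Python's built-in `html.parser` to skip links that are hidden by an inline `display:none` or `visibility:hidden` style, carry the `hidden` attribute, or are marked `rel="nofollow"`. It cannot see links hidden by external stylesheets, by a hidden parent element, or by color tricks; catching those requires a rendered browser.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

# Inline style fragments (spaces removed, lower-cased) that hide an element.
HIDDEN_STYLES = ("display:none", "visibility:hidden")


class SafeLinkExtractor(HTMLParser):
    """Collect hrefs of <a> tags, setting aside ones that look like honeypots."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        self.skipped: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if not href:
            return
        style = (attr.get("style") or "").replace(" ", "").lower()
        rel = (attr.get("rel") or "").lower().split()
        is_hidden = "hidden" in attr or any(s in style for s in HIDDEN_STYLES)
        if is_hidden or "nofollow" in rel:
            self.skipped.append(href)
        else:
            self.links.append(href)


def safe_links(html: str) -> List[str]:
    """Return the links on a page that pass the inline visibility checks."""
    parser = SafeLinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

Logging `skipped` is also a cheap way to notice when a site starts adding trap links.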

Check if Website is Changing Layouts

Some websites make it tricky for scrapers by serving slightly different layouts.

For example, on a website, pages 1-20 may display one layout, and the rest of the pages may display something else. To catch this, check whether your XPaths or CSS selectors are actually returning data. If not, check how the layout is different and add a condition in your code to scrape those pages differently.
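One way to structure that condition is a list of extractors, one per known layout, tried in order, with a loud failure when none matches. In this sketch the markup and regular expressions are hypothetical stand-ins for real XPath or CSS selectors; in practice you would use a parser such as lxml, parsel or BeautifulSoup.

```python
import re
from typing import Callable, Dict, List, Optional

Extractor = Callable[[str], Optional[Dict[str, str]]]


def layout_a(html: str) -> Optional[Dict[str, str]]:
    """Hypothetical layout used on some pages: title in <h1 class="product-title">."""
    m = re.search(r'<h1 class="product-title">(.*?)</h1>', html)
    return {"title": m.group(1)} if m else None


def layout_b(html: str) -> Optional[Dict[str, str]]:
    """Hypothetical alternative layout: title in <div id="name">."""
    m = re.search(r'<div id="name">(.*?)</div>', html)
    return {"title": m.group(1)} if m else None


EXTRACTORS: List[Extractor] = [layout_a, layout_b]


def extract(html: str, url: str) -> Dict[str, str]:
    """Try each known layout in turn; fail loudly if none matches."""
    for extractor in EXTRACTORS:
        data = extractor(html)
        if data:
            return data
    # No known layout matched: the site has probably changed, so stop and inspect
    # rather than silently collecting empty records.
    raise ValueError(f"unrecognised layout at {url}")
```

Failing loudly matters more than the specific matching technique: an empty result that goes unnoticed is how layout changes usually slip through.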

Avoid scraping data behind a login

Login is basically permission to get access to web pages. Some websites, like Indeed, do not grant this permission.

If a page is protected by a login, the scraper has to send some information or cookies along with each request to view the page. This makes it easy for the target website to see requests coming from the same account. They could take away your credentials or block your account, which can, in turn, lead to your web scraping efforts being blocked.

It's generally preferred to avoid scraping websites that have a login, as you will get blocked easily, but one thing you can do is imitate human browsers whenever authentication is required to get the target data you need.

Use Captcha Solving Services

Many websites use anti web scraping measures. If you are scraping a website on a large scale, the website will eventually block you. You will start seeing captcha pages instead of web pages. There are services to get past these restrictions, such as 2Captcha or Anticaptcha.

If you need to scrape websites that use Captcha, it is better to resort to captcha services. Captcha services are relatively cheap, which is useful when performing large-scale scrapes.

How can websites detect and block web scraping?

Websites can use different mechanisms to distinguish a scraper/spider from a normal user. Some of these methods are listed below:

- Unusual traffic/high download rate, especially from a single client or IP address within a short time span.

- Repetitive tasks performed on the website in the same browsing pattern, based on the assumption that a human user won't perform the same repetitive tasks all the time.

- Checking if you are a real browser: a simple check is to try to execute JavaScript. Smarter tools can go a lot further and check your graphics cards and CPUs 😉 to make sure you are coming from a real browser.

- Detection through honeypots: these honeypots are usually links which aren't visible to a normal user but only to a spider. When a scraper/spider tries to access the link, the alarms are tripped.

How to address this detection and avoid getting web scraping blocked?

Spend some time upfront investigating the anti-scraping mechanisms used by a site and build the spider accordingly. It will provide a better outcome in the long run and increase the longevity and robustness of your work.

How do you find out if a website has blocked or banned you?

If any of the following signs appear on the site that you are crawling, it is usually a sign of being blocked or banned.

- CAPTCHA pages

- Unusual content delivery delays

- Frequent responses with HTTP 404, 301 or 50x errors

Frequent appearance of these HTTP status codes is also an indication of blocking:

- 301 Moved Permanently

- 401 Unauthorized

- 403 Forbidden

- 404 Not Found

- 408 Request Timeout

- 429 Too Many Requests

- 503 Service Unavailable

Here is what Amazon.com tells you when you are blocked.

To discuss automated access to Amazon data please contact api-services-support@amazon.com.

For information about migrating to our APIs refer to our Marketplace APIs at <link> or our Product Advertising API at <link> for advertising use cases.

Sorry! Something went wrong!

With pictures of the cute dogs of Amazon.

You may also see responses or messages like these from websites using popular anti-scraping tools.

We want to make sure it is actually you that we are dealing with and not a robot

Please check the box below to access the site

<reCaptcha>

Why is this verification required? Something about the behavior of the browser has caught our attention.

There are various explanations for this:

- you are browsing and clicking at a speed much faster than expected of a human being

- something is preventing Javascript from working on your computer

- there is a robot on the same network (IP address) as you

Having issues accessing the site? Contact Support

Authenticate your robot

or

Please verify you are a human

<Captcha>

Access to this page has been denied because we believe you are using automation tools to browse the website

This may happen as a result of the following:

- Javascript is disabled or blocked by an extension (ad blockers for example)

- Your browser does not support cookies

Please make sure that Javascript and cookies are enabled on your browser and that you are not blocking them from loading

or

Pardon our interruption

As you were browsing <website> something about your browser made us think you were a bot. There are a few reasons this might happen

- You're a power user moving through this website with super-human speed

- You've disabled JavaScript in your web browser

- A third-party browser plugin, such as Ghostery or NoScript, is preventing JavaScript from running. Additional information is available in this support article.

After completing the CAPTCHA below, you will immediately regain access to <website>

or

Fault 1005 Ray ID: <hash> • <time>

Access denied

What happened?

The possessor of this website (<website>) has banned the autonomous system number (ASN) your IP address is in (<number>) from accessing this website.

A comprehensive list of HTTP return codes (successes and failures) can be found here. It will be worth your time to read through these codes and become familiar with them.
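These signs can be checked automatically on every response. The sketch below flags a response when its status code is one of those listed above or its body contains phrases typical of the block pages quoted here, then reports trouble only when flagged responses become frequent, since a single 404 or 301 is often genuine. The phrase list, window size and threshold are assumptions to tune for the sites you crawl.

```python
from collections import deque
from typing import Deque, Optional

# Status codes listed above as common signs of blocking.
BLOCK_STATUS_CODES = {301, 401, 403, 404, 408, 429, 503}

# Lower-case fragments typical of block pages; extend with what you observe.
BLOCK_PHRASES = (
    "captcha",
    "not a robot",
    "verify you are a human",
    "automated access",
    "access denied",
)


def looks_blocked(status: int, body: str) -> Optional[str]:
    """Return a short reason if this response looks like a block, else None."""
    if status in BLOCK_STATUS_CODES:
        return f"status {status}"
    text = body.lower()
    for phrase in BLOCK_PHRASES:
        if phrase in text:
            return f"phrase {phrase!r}"
    return None


class BlockMonitor:
    """Track the share of suspicious responses among the last `window` requests."""

    def __init__(self, window: int = 50, threshold: float = 0.2) -> None:
        self.recent: Deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def record(self, status: int, body: str) -> bool:
        """Record one response; return True once suspicious responses are frequent."""
        self.recent.append(looks_blocked(status, body) is not None)
        return sum(self.recent) / len(self.recent) >= self.threshold
```

When `record()` returns True, it is a good moment to pause the crawl, slow it down or switch proxies rather than keep sending requests.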

Summary

All the ideas above provide a starting point for you to build your own solutions or refine your existing ones. If you have any ideas or suggestions, please join the discussion in the comments section.

Thanks for reading.

Or you can ignore everything above and just get the data delivered to you as a service. Interested?

Turn the Internet into meaningful, structured and usable data

Source: https://www.scrapehero.com/how-to-prevent-getting-blacklisted-while-scraping/
